Businesses increasingly run their infrastructure on cloud services, and Amazon Web Services (AWS) is one of the leading providers, with a wide range of services for different requirements. Managing AWS infrastructure by hand, however, becomes difficult as it grows. Terraform, an infrastructure-as-code tool, addresses this by letting users define and provision infrastructure resources declaratively.

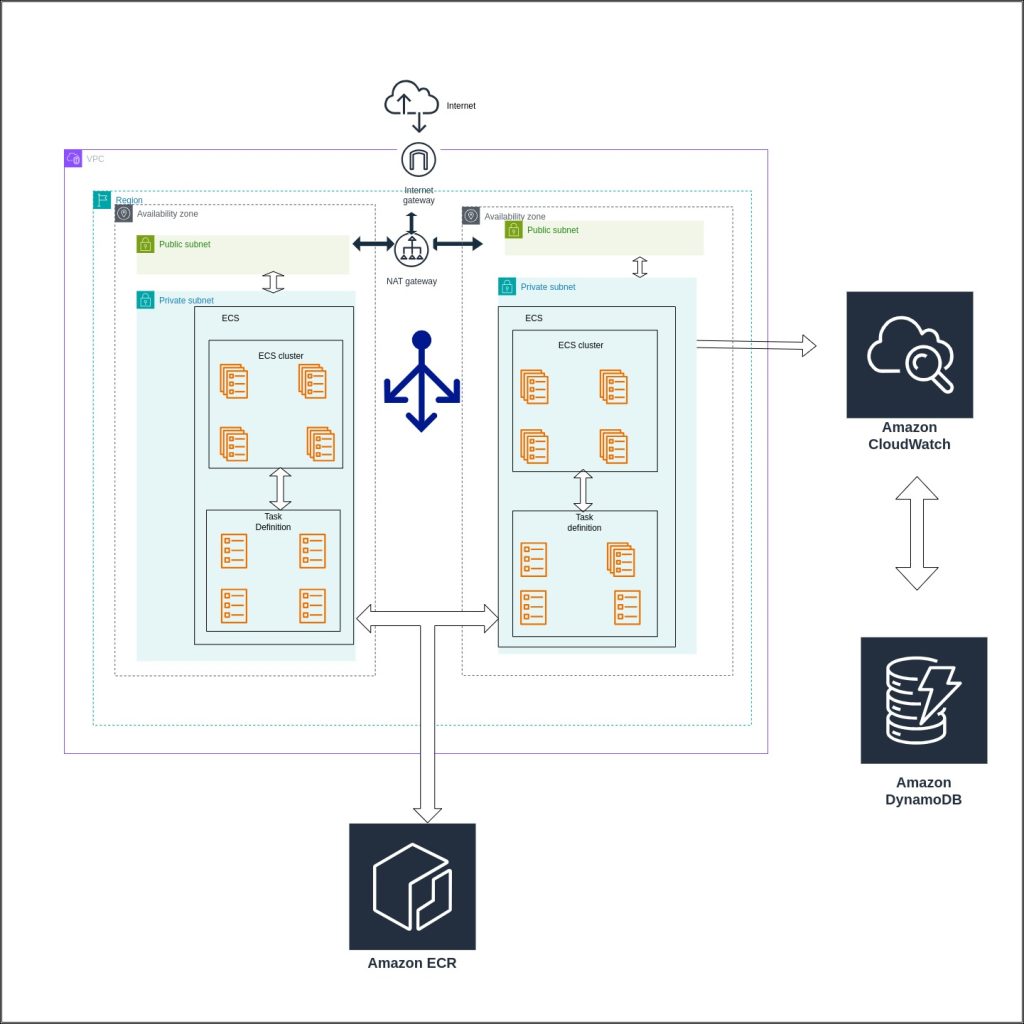

In this blog post, we’ll explore the process of establishing a resilient AWS infrastructure using Terraform. We’ll cover essential components such as VPC, ECR, ECS Fargate, ECS services, task definitions, load balancers, CloudWatch dashboards for monitoring, DNS records, and DynamoDB for database management. Additionally, we’ll discuss Terraform workspace management and state file locking using an S3 bucket and a DynamoDB table.

Setting the Stage: Terraform and AWS

Terraform simplifies infrastructure management by allowing users to define infrastructure as code using a declarative configuration language. This approach offers several advantages, including repeatability, version control, and ease of collaboration.

AWS provides a comprehensive suite of cloud services, making it an ideal choice for hosting various types of applications. By leveraging Terraform with AWS, users can automate the provisioning and management of AWS resources, thereby streamlining the infrastructure deployment process.

Understanding ECS Fargate

ECS Fargate operates within the broader context of Amazon ECS (Elastic Container Service), a fully managed container orchestration service. While ECS allows users to manage clusters of EC2 instances to host their containers, ECS Fargate takes this a step further by abstracting away the need to provision and manage EC2 instances directly. Instead, Fargate provisions and manages the underlying compute resources automatically, allowing users to run containers without having to think about the underlying infrastructure.

ECS Fargate Services

In ECS, a service is a logical grouping of tasks that ensures a specified number of tasks are running and maintains them in the face of failures or scaling events. ECS services allow you to define how many tasks should be running at any given time and how they should be distributed across your cluster.

With ECS Fargate, creating a service involves defining a task definition (which specifies how the container should be run) and configuring the desired number of tasks to run. ECS Fargate takes care of the rest, including provisioning the necessary resources and scheduling tasks onto the available infrastructure.

Task Definitions

Task definitions are at the core of ECS. They define the configuration of containers that will be launched as tasks within ECS services. A task definition includes details such as:

- Container Image: The location of the container image to use for the task.

- CPU and Memory: The amount of CPU and memory allocated to the task (required at the task level on Fargate) and, optionally, to each container.

- Networking: How the containers should be networked, including port mappings and networking mode.

- Volumes: Any data volumes that should be mounted into the containers.

- Container Definitions: Configuration details for each container, such as environment variables, command to run, and resource limits.

Task definitions provide a blueprint for how ECS should run containers within your service. They allow for flexibility and customization, enabling you to define the exact requirements and configurations for your containerized applications.
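
As a rough illustration of the fields listed above, the container-level settings could be written in Terraform as a JSON-encoded list. This is a minimal sketch, not a definitive configuration: the account ID, image tag, command, environment variable, port, and the `app-data` volume name are all placeholders, and the volume itself would still have to be declared in the task definition that uses this value.

```hcl
locals {
  # All values below are illustrative placeholders.
  container_definitions = jsonencode([
    {
      name      = "my-app"
      image     = "123456789012.dkr.ecr.us-east-1.amazonaws.com/my-app:latest"
      cpu       = 256
      memory    = 512
      essential = true

      # Command to run and environment variables for the container
      command = ["node", "server.js"]
      environment = [
        { name = "APP_ENV", value = "dev" }
      ]

      # Networking: the port the container listens on
      portMappings = [
        { containerPort = 8080, protocol = "tcp" }
      ]

      # Volumes: mounts a task-level volume named "app-data" (assumed)
      mountPoints = [
        { sourceVolume = "app-data", containerPath = "/data" }
      ]
    }
  ])
}
```

The resulting string can be passed to the `container_definitions` argument of an `aws_ecs_task_definition` resource as `local.container_definitions`.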

Benefits of ECS Fargate

- Simplicity: ECS Fargate abstracts away the complexity of managing infrastructure, allowing developers to focus on building and deploying applications.

- Scalability: Fargate provisions compute capacity for each task on demand, so there is no cluster capacity to manage. Combined with ECS Service Auto Scaling, the number of running tasks can follow application demand.

- Cost-Efficiency: With Fargate, you pay for the vCPU and memory your tasks request while they run, eliminating the need to provision and maintain idle infrastructure.

- Security: Fargate provides a secure environment for running containers, with built-in isolation and fine-grained access controls.

We Will Utilize AWS Services for Our Deployments and Create Them Using Terraform

1. Virtual Private Cloud (VPC)

The VPC serves as the foundation for the AWS infrastructure, providing isolated virtual networks for hosting resources securely. With Terraform, defining a VPC along with subnets, route tables, and internet gateways becomes straightforward, ensuring proper network segregation and connectivity.

    resource "aws_vpc" "main" {
      cidr_block           = "10.0.0.0/16"
      enable_dns_support   = true
      enable_dns_hostnames = true
    }

    resource "aws_subnet" "public" {
      vpc_id            = aws_vpc.main.id
      cidr_block        = "10.0.1.0/24"
      availability_zone = "us-east-1a"
    }
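
The route table and internet gateway mentioned above are what make the subnet public. A minimal sketch, assuming the `aws_vpc.main` and `aws_subnet.public` resources from this section (the resource names `main` and `public` used for the new resources are illustrative):

```hcl
# Internet gateway attached to the VPC
resource "aws_internet_gateway" "main" {
  vpc_id = aws_vpc.main.id
}

# Route table that sends all non-local traffic to the internet gateway
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.main.id
  }
}

# Associate the route table with the public subnet
resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}
```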

2. Elastic Container Registry (ECR)

ECR facilitates the storage, management, and deployment of Docker container images. Integrating ECR with Terraform allows for the creation and configuration of repositories to store container images securely, enabling seamless integration with container orchestration services.

    resource "aws_ecr_repository" "example" {
      name = "my-app"
    }

3. ECS Fargate

ECS (Elastic Container Service) Fargate offers serverless container management, allowing users to run containers without managing the underlying infrastructure. Terraform simplifies the provisioning of ECS clusters and Fargate tasks, enabling efficient deployment and scaling of containerized applications.

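    resource "aws_ecs_cluster" "example" {
      name = "my-cluster"
    }

    resource "aws_ecs_task_definition" "example" {
      family                   = "my-task"
      requires_compatibilities = ["FARGATE"]
      network_mode             = "awsvpc"
      cpu                      = "256"
      memory                   = "512"

      # container_definitions is a required argument; the :latest tag is an assumption.
      # Pulling from ECR also needs an execution_role_arn, which is not shown here.
      container_definitions = jsonencode([{
        name      = "my-app"
        image     = "${aws_ecr_repository.example.repository_url}:latest"
        essential = true
      }])
    }

    resource "aws_ecs_service" "example" {
      name            = "my-service"
      cluster         = aws_ecs_cluster.example.id
      task_definition = aws_ecs_task_definition.example.arn
      desired_count   = 1
      launch_type     = "FARGATE"

      # awsvpc tasks must be told which subnets to run in
      network_configuration {
        subnets          = [aws_subnet.public.id]
        assign_public_ip = true
      }
    }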
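
Scaling the number of tasks with demand is usually handled by Application Auto Scaling attached to the service. The following is a sketch under stated assumptions: it targets the `aws_ecs_cluster.example` and `aws_ecs_service.example` resources above, and the capacity limits, policy name, and 70% CPU target are illustrative values rather than recommendations.

```hcl
# Register the service's desired count as a scalable target
resource "aws_appautoscaling_target" "ecs" {
  service_namespace  = "ecs"
  scalable_dimension = "ecs:service:DesiredCount"
  resource_id        = "service/${aws_ecs_cluster.example.name}/${aws_ecs_service.example.name}"
  min_capacity       = 1 # assumed lower bound
  max_capacity       = 4 # assumed upper bound
}

# Target tracking: add or remove tasks to keep average CPU near the target
resource "aws_appautoscaling_policy" "cpu" {
  name               = "cpu-target-tracking"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.ecs.service_namespace
  scalable_dimension = aws_appautoscaling_target.ecs.scalable_dimension
  resource_id        = aws_appautoscaling_target.ecs.resource_id

  target_tracking_scaling_policy_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ECSServiceAverageCPUUtilization"
    }
    target_value = 70 # assumed CPU utilization target, in percent
  }
}
```

Once a policy like this manages the task count, the service's `desired_count` will drift from the configured value, so it is common to ignore changes to that attribute in the service's `lifecycle` block.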

4. Load Balancers

Load balancers play a crucial role in distributing incoming traffic across multiple targets to ensure high availability and scalability. Terraform enables the creation of Application Load Balancers (ALB) or Network Load Balancers (NLB) along with listeners, target groups, and routing rules, facilitating seamless traffic management for ECS services.
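
The target group and listener mentioned here could look roughly like the following. It is a sketch, assuming the `aws_vpc.main` resource from the VPC section and the `aws_lb.example` load balancer defined in this section; the names, port 80, and the `/` health check path are placeholders.

```hcl
resource "aws_lb_target_group" "example" {
  name        = "my-target-group"
  port        = 80
  protocol    = "HTTP"
  vpc_id      = aws_vpc.main.id
  target_type = "ip" # tasks using awsvpc networking register by IP address

  health_check {
    path = "/" # assumed health check endpoint
  }
}

# Listener that forwards incoming HTTP traffic to the target group
resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.example.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.example.arn
  }
}
```

For tasks to be registered with the target group, the ECS service would also need a `load_balancer` block that references it along with the container name and port.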

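    # An Application Load Balancer needs subnets in at least two Availability
    # Zones, so a second public subnet is added here (CIDR and AZ are assumed).
    resource "aws_subnet" "public_b" {
      vpc_id            = aws_vpc.main.id
      cidr_block        = "10.0.2.0/24"
      availability_zone = "us-east-1b"
    }

    resource "aws_lb" "example" {
      name               = "my-load-balancer"
      internal           = false
      load_balancer_type = "application"
      subnets            = [aws_subnet.public.id, aws_subnet.public_b.id]

      tags = {
        Name = "my-load-balancer"
      }
    }

5. CloudWatch Dashboards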

Monitoring infrastructure performance and health is essential for ensuring optimal operation. With Terraform, users can define CloudWatch dashboards to visualize key metrics and alarms, enabling proactive monitoring and alerting for AWS resources.
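
On the alerting side, a CloudWatch alarm on the ECS service's CPU could be sketched as follows. It assumes the `aws_ecs_cluster.example` and `aws_ecs_service.example` resources from the ECS section; the alarm name, the 80% threshold, and the evaluation window are illustrative.

```hcl
resource "aws_cloudwatch_metric_alarm" "ecs_cpu_high" {
  alarm_name          = "my-service-cpu-high"
  namespace           = "AWS/ECS"
  metric_name         = "CPUUtilization"
  statistic           = "Average"
  period              = 300
  evaluation_periods  = 2
  comparison_operator = "GreaterThanThreshold"
  threshold           = 80 # assumed threshold, in percent

  dimensions = {
    ClusterName = aws_ecs_cluster.example.name
    ServiceName = aws_ecs_service.example.name
  }

  # alarm_actions is omitted; without it the alarm only changes state
  # and does not notify anyone.
}
```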

    resource "aws_cloudwatch_dashboard" "example" {
      dashboard_name = "my-dashboard"

      dashboard_body = jsonencode({
        widgets = [
          {
            type   = "metric"
            x      = 0
            y      = 0
            width  = 12
            height = 6
            properties = {
              metrics = [
                ["AWS/EC2", "CPUUtilization", "InstanceId", "i-1234567890abcdef0"]
              ]
              period = 300
              stat   = "Average"
              region = "us-east-1" # metric widgets need a region; assumed to match the subnet's AZ
              title  = "EC2 CPU Utilization"
            }
          }
        ]
      })
    }

6. DNS Records

DNS (Domain Name System) records are essential for mapping domain names to IP addresses and enabling access to applications via user-friendly URLs. Terraform simplifies the configuration of DNS records using services like Route 53, allowing for seamless domain management and resolution.
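
For an application served through a load balancer, an alias record is often used instead of a fixed IP address. A minimal sketch, assuming the `aws_route53_zone.example` hosted zone defined in this section and the `aws_lb.example` load balancer from the previous one; the `app.example.com` name is hypothetical.

```hcl
resource "aws_route53_record" "alias" {
  zone_id = aws_route53_zone.example.zone_id
  name    = "app.example.com" # hypothetical record name
  type    = "A"

  # Alias records resolve to the load balancer's current addresses,
  # so no ttl or records arguments are set.
  alias {
    name                   = aws_lb.example.dns_name
    zone_id                = aws_lb.example.zone_id
    evaluate_target_health = true
  }
}
```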

    resource "aws_route53_zone" "example" {
      name = "example.com"
    }

    resource "aws_route53_record" "example" {
      zone_id = aws_route53_zone.example.zone_id
      name    = "www.example.com"
      type    = "A"
      ttl     = 300
      records = ["1.2.3.4"]
    }

7. DynamoDB

DynamoDB is a fully managed NoSQL database service offered by AWS, ideal for scalable and high-performance applications. Leveraging Terraform, users can provision DynamoDB tables along with required attributes, capacity settings, and indexes, facilitating efficient data storage and retrieval.

    resource "aws_dynamodb_table" "example" {
      name           = "my-table"
      billing_mode   = "PROVISIONED"
      read_capacity  = 10
      write_capacity = 10
      hash_key       = "id" # partition key; the resource has no key_schema block

      attribute {
        name = "id"
        type = "S"
      }
    }

Understanding Terraform Workspaces

Terraform workspaces provide a powerful mechanism for managing multiple environments within a single Terraform configuration. Each workspace maintains its own set of state files, allowing users to isolate configurations for different stages such as development, staging, and production. This enables teams to work on independent infrastructure changes concurrently without interference, promoting agility and reducing risk.

Benefits of Terraform Workspaces:

- Isolation: Workspaces ensure that changes made in one environment do not affect others, minimizing the risk of unintended consequences.

- Simplified Workflow: Teams can work on different features or environments simultaneously, speeding up development cycles.

- Enhanced Collaboration: Workspaces facilitate collaboration by providing a structured approach to managing infrastructure configurations across teams.
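
Inside a configuration, the active workspace name is available as `terraform.workspace`, which can drive per-environment naming and sizing. The sketch below is only an illustration: the workspace names, the task counts, and the `per_env` repository are hypothetical.

```hcl
locals {
  # Name of the currently selected workspace, e.g. "dev" or "prod"
  environment = terraform.workspace

  # Hypothetical per-environment sizing
  desired_count_by_env = {
    dev     = 1
    staging = 2
    prod    = 3
  }

  # Falls back to 1 for any workspace not listed above
  desired_count = lookup(local.desired_count_by_env, local.environment, 1)
}

# Resource names can include the workspace to avoid collisions
resource "aws_ecr_repository" "per_env" {
  name = "my-app-${local.environment}"
}
```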

Setting Up Terraform Workspaces

    # Create a new workspace and switch to it
    terraform workspace new dev

    # Switch to an existing workspace
    terraform workspace select prod

    # List available workspaces
    terraform workspace list

Storing Backend Configurations on S3

Amazon S3 (Simple Storage Service) is a reliable and scalable place to keep Terraform state. Storing state files remotely in S3 gives teams durability, shared access, and, when bucket versioning is enabled, a history of earlier state versions. S3 also integrates with AWS security features such as encryption and access control policies.
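
The bucket itself has to exist before `terraform init` can use it as a backend, so it is usually created once in a separate bootstrap configuration. A sketch of such a bucket, assuming the bucket name used in this post's backend block; the `terraform_state` resource names and the choice of AES256 encryption are illustrative.

```hcl
resource "aws_s3_bucket" "terraform_state" {
  bucket = "srpay-state-locking-bucket"
}

# Keep earlier versions of the state file for recovery
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state at rest with S3-managed keys
resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# State files can contain secrets, so block all public access
resource "aws_s3_bucket_public_access_block" "terraform_state" {
  bucket                  = aws_s3_bucket.terraform_state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```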

    terraform {
      backend "s3" {
        bucket         = "srpay-state-locking-bucket"
        key            = "terraform.tfstate"
        region         = "me-central-1"
        dynamodb_table = "terraform-locks"
        encrypt        = true
      }
    }

Configuring State File Locking with DynamoDB

DynamoDB offers a robust solution for implementing state file locking in Terraform, preventing concurrent modifications to the same state file. By utilizing DynamoDB as a locking mechanism, teams can mitigate the risk of conflicting operations and maintain consistency across deployments. This ensures that only one user or process can modify the state file at any given time, reducing the likelihood of errors and conflicts.
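
The lock table referenced by the backend's `dynamodb_table` setting needs a string partition key named `LockID`. A minimal sketch, assuming the `terraform-locks` table name from the backend block; the on-demand billing mode and the `terraform_locks` resource name are choices made for this example.

```hcl
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST" # lock traffic is light, so on-demand is assumed
  hash_key     = "LockID"          # key name expected by the S3 backend

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Like the state bucket, this table is typically created before the backend is initialized, since Terraform cannot lock state in a table that does not exist yet.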